What you ask is what you get

Unnecessary survey questions, improper setups, leading questions, and the ABC/WaPo poll

Back in the dark ages, as I was finishing my bachelor's at Purdue, I took a class in questionnaire construction. Fifteen weeks on the minutiae of question wording, font size, white space, mailing strategies, and coding mechanisms.1

This being before the internet, everything was about mail questionnaires. How do you write a good cover letter? How do you track non-response bias? Does non-white paper work better than white paper? Is it a good idea to write your survey on 11 X 14 paper and shrink it down to 8 1/2 X 11?2

In addition to this information proving useful when I’d teach the survey section of my research methods classes, it has also primed me to be on the lookout for problematic questions. With the plethora of polling that now passes for information, this has become a particular challenge. I’m the first to admit that I’m overly conscious of these issues — as anyone who has ever tried to get me to finish a phone survey can attest!

But I still think it’s important, because the people writing about the polls, the people writing about the people writing about the polls, and the people worrying about what those people wrote and what it might mean seem to be riding a merry-go-round of repetition without considering how the questions might influence responses.

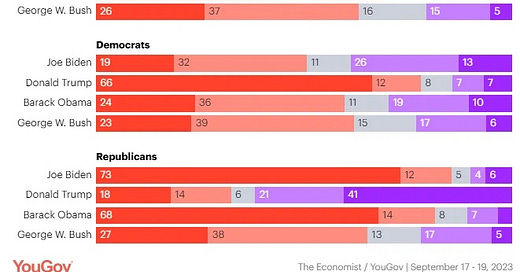

This post was initially prompted by something shared by social media friend Dan Cox on X on Friday. Dan is the director of surveys for AEI and writes an excellent Substack called American Storylines. He had shared the following screenshot from a recent YouGov poll.

My gripe about this data is that it asks for an opinion on something that is a matter of fact. As Dan points out, the partisan split is remarkable, which is why he shared it. Only 32% of Republicans think that Donald Trump benefitted financially while in office, while 78% of Democrats think so. But unless the purpose of the question is to show that there is a partisan divide (shocker!!) in our country, it is a useless question.

It presumes that people have some inkling of what the before and after financial status of these four presidents is/was. In the absence of any information upon which to base their judgment, it is no surprise that they simply fall back on their partisan priors.

Presidents file financial reports. Even if we only have full tax records on three of the four presidents, we still have access to that data. For example, it would not be that hard to find Obama’s financial status in December of 2008 and contrast it with his status in December of 2017. The difference between those dates would demonstrate the extent to which he “financially benefitted” from his office, regardless of what poll respondents in 2023 thought.

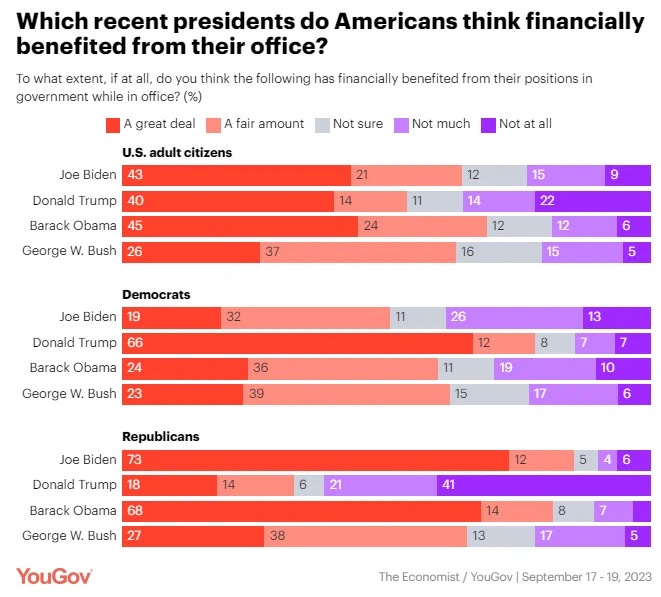

Here’s a mundane example of bad wording from a question shared by Sheila Gregoire. The question has to do with the classic “avoiding lusting after women” that shows up regularly in evangelical circles.

I don’t want to make too much of this, given how much of a leading question it is. The first and last response options are throwaways, so it’s not surprising that responses load up on the middle two. And the third option is a compound phrase, making it difficult to interpret (if someone doesn’t think it’s impossible, what are they supposed to respond?).3

You may have noticed that everyone freaked yesterday about the ABC/Washington Post poll that showed Trump beating Biden by 10 points among registered voters.4 While the story acknowledges that it’s an outlier relative to other polls,5 there are also some real issues with question wording. You can find the topline results, which include the questions, here.

One of the questions that caught my eye has to do with the impending government shutdown. With very little setup, the pollsters asked the following question:

I’ve been reading the coverage of the shutdown very closely over the last week or so. Clearly, there is an impasse between most Republicans in the House and the members of the Freedom Caucus. The former have been calling out the obstinacy of the latter on the evening news and on social media. The Senate has been passing funding bills based on the budget agreed to in the spring debt ceiling agreement between the White House, the Senate, and the House.

So in what way is the potential (inevitable?) shutdown about “a dispute over spending and policy issues”? And shouldn’t proper analysis be focused on the intraparty fight that has kept the House from even approving debate rules? Once the question is framed generically as “a dispute”, it invites people to provide their responses through the lens of general Washington dysfunction.

Marcy Wheeler, who posts on X as emptywheel, flagged another problematic (and very important) question in the ABC/WaPo survey. Two questions deal with the now-launched “impeachment inquiry”.

The first question, with its lead-in “based on what you know”, seems at first glance to show opposition to impeachment. But that lead-in is doing an awful lot of work. A better question would first ask if people had followed the story (with a potential follow-up on news sources!). In other words, the question asks whether people are for or against impeachment regardless of whether they have the information that might shape that opinion.

The second question is the one that Wheeler focused on. This question stipulates an inquiry rather than impeachment (without explaining the difference). More significantly, it adds the explainer “because of alleged involvement with his son’s international business dealings”. Not only does the question leave the “involvement” hanging as a potential problem, it doesn’t even stipulate that Hunter’s (unseemly) business dealings were illegal. The wording of the question creates the likelihood of the “held accountable” response.

So here’s the takeaway for those interested in doing or interpreting polling. First, don’t ask people’s opinions on factual matters. At the very least, lead into the question with a quick explainer on what the facts are and then get a response. Second, don’t insert potential explainers into the question itself and, if you must, make sure that the explanatory gloss doesn’t misstate the facts.

And, by the way, national polling fourteen months before an election is not particularly useful, especially when compared to state polls among the swing states that will determine the electoral college outcome.

I had declared a minor in psych to go along with my sociology major and it only required 12 credits of electives. My interest in research made this preferable to other psych classes (I did one on the social psych of aggression, one on motivation theory, and one on attitudes).

Answers (as I remember them): play to personal interest and make a redundant promise of complete confidentiality; include a returnable postcard with the mailing (although I did hear a story about someone who hid identifying information under the return stamp); yes; yes. As I said, by the end of the semester, the minutiae were a little much.

It is not uncommon for evangelical books (see Dobson, James) to ask these questions not of a random sample of individuals but of people who self-selected into their workshops, whom they then treat as if they were representative. Actually collecting representative random samples is what makes Sheila’s books so good.

It also has young voters favoring Trump by a bunch, which is NOT going to happen. There is likely something wonky with the cell phone response rate.

The same poll in February showed Trump up 3, and one a year ago had him up by 1, so there’s some consistent systemic miss going on.